What Works, What Kills What, and Why It Matters

By Mike Rea

In pharmaceutical R&D, where billions ride on the flip of a biological coin, decision frameworks aren’t just nice-to-haves – they’re the rules that separate the winners from the not-so-greats.

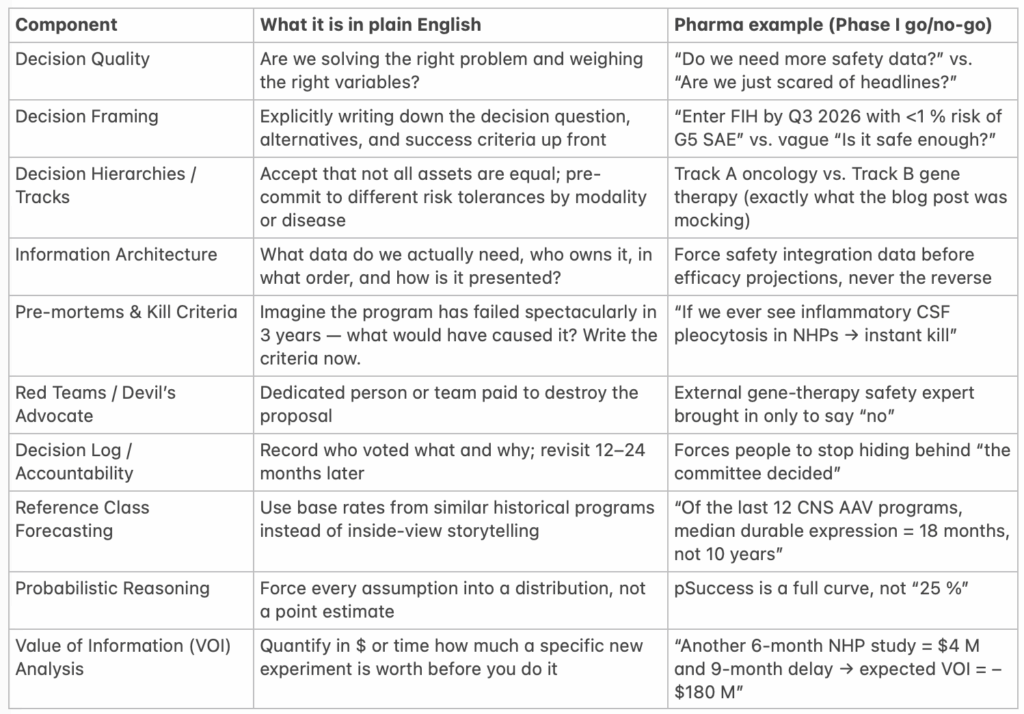

Decision Intelligence Frameworks

(a quick, pragmatic guide for people who actually have to make high-stakes R&D decisions)

I’ve written before about the decision risk in having one decision methodology for all phase I assets. While there is inevitable risk in pharma’s phase I decisions, there is zero risk attributed to the decision process itself: that is, 100% faith that it is the best possible decision process, with no other processes considered.

“Uniform quantitative criteria are anti-innovation…” Requiring the same pSuccess and NPV bar for a $2B one-time gene therapy as for a $400M peak-sales oral forces you to either (a) torture the model or (b) kill genuinely transformative programs.

In reality, early-phase pharma decision-making is anything but uniform.

A first-in-human (FIH) dose-escalation call for a small-molecule cytotoxic isn’t governed by the same risk tolerance, evidentiary bar, or stakeholder dynamics as, say, a phase I/II CAR-T or an AAV gene therapy with potential lifelong effects.

Yet many companies do pretend (or aspire) to have “one decision-making process” for all phase I go/no-go decisions, usually some variation of Target Product Profile (TPP) + Probability of Technical Success (PTS) + net present value (NPV) cutoffs. (Yes, I’ve written many times about the McK-like nonsense of TPPs and eNPVs…)

Here’s how the “One Process to Rule Them All” usually looks in real life, and why it quietly fails in practice:

The Standard Corporate Template (the fiction)

- Preclinical package → Human Dose Projection (MABEL/PAD/MRD)

- TPP defined (almost always too optimistic)

- Quantitative decision criteria

- Go if predicted human dose < X mg (or < Y µg/kg for biologics)

- Go if eNPV > $500M and pSuccess > 15–20 %

- Go if competitive intensity score < 4/10

- Governance: Portfolio Review Committee votes by coloured cards (green/yellow/red) after a 45-minute deck review.
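To make the uniformity concrete, the template above can be sketched as a single gate function. This is a minimal illustration, not any company's actual process: the `Program` fields, the example programs, and the value chosen for the dose limit "X" are all hypothetical, while the eNPV, pSuccess, and competitive-intensity thresholds are the ones listed above (using the lower 15% end of the pSuccess range).

```python
from dataclasses import dataclass

@dataclass
class Program:
    name: str
    enpv_musd: float            # expected NPV in $M
    p_success: float            # probability of success, 0 to 1
    competitive_intensity: int  # score out of 10
    predicted_dose_mg: float    # projected human dose

def uniform_gate(p: Program, max_dose_mg: float) -> bool:
    """One-size-fits-all go/no-go: every criterion must pass, whatever the modality."""
    return (
        p.predicted_dose_mg < max_dose_mg
        and p.enpv_musd > 500
        and p.p_success > 0.15
        and p.competitive_intensity < 4
    )

MAX_DOSE_MG = 100  # hypothetical stand-in for "X"; the template does not specify it

# Two hypothetical programs with very different risk/reward shapes
incremental = Program("incremental oral", 650, 0.20, 3, 50)
ambitious = Program("high-ambition program", 2400, 0.09, 2, 50)

print(uniform_gate(incremental, MAX_DOSE_MG))  # True
print(uniform_gate(ambitious, MAX_DOSE_MG))    # False
```

The second program fails on pSuccess alone, even though its eNPV is several times higher. The gate has no way to express that trade-off.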

Where it cracks immediately

- Cytotoxic oncology (2025 version)

You’ll tolerate 10⁻⁴ risk of death in phase I because the patients are end-stage and the bar is response rate > 20 %. Your MTD-finding 3+3 design is fine.

- Intra-thecal antibody or brain-penetrant small molecule for Alzheimer’s

Same process, same committee, same NPV cutoff. But now a single serious neuro adverse event in a 68-year-old with prodromal AD kills the program politically, even if it’s not dose-limiting by protocol. The risk tolerance is 100× lower.

- One-time curative gene therapy (e.g., hemgenix-like price aspirations)

You need 15-year durability projections from 9-month mouse data. Your pSuccess is a coin flip dressed up in Bayesian clothing, but the same 20 % hurdle is applied as if it were another oral TYK2 inhibitor.

- Radiopharmaceutical with alpha emitter

The dosimetry models have 5× uncertainty, the therapeutic window is a knife-edge, but the deck still has to show “projected dose < 2 Gy to kidneys” with a straight face.

The unspoken two-track reality (that everyone knows, but few admit)

Track A – “Franchise oncology and rare oncology”

Decision = “Do we think it can kill tumor cells without killing the patient too fast?”

Everything else is theater.

Track B – Everything else (CNS, chronic non-oncology, gene therapy, pediatric, etc.)

Decision = “Can we convince ourselves and regulators that the risk of a catastrophic safety event in phase I is < 1 in 10,000?”

NPV is a tiebreaker only after safety concern is satisfied.

Decision Intelligence is the disciplined practice of combining three things that are normally kept in separate silos:

- Analytical rigour (data, models, probabilities)

- Behavioural awareness (how humans actually decide under uncertainty, politics, career risk)

- Process design (how you structure meetings, information flow, and accountability so the first two don’t get mushed together)

Decision Intelligence originated in tech/data-science circles (Google, BCG Gamma, etc.), but it maps perfectly onto early-phase pharma portfolio decisions.

Core Components of Any Serious Decision Intelligence Framework

*NHP = Non-Human Primate…

Frameworks People Actually Use in Pharma / Biotech (2025 edition)

(Caveat – these are public frameworks, not something I’ve seen in confidence…)

- RAVE™ (Genentech/Roche)

Risk, Alignment, Value, Executability – scored 1–9 with forced distribution. Explicitly separates “strategic fit” from pure NPV.

- DICE (Pfizer)

Decision-making In Complex Environments – heavy on pre-mortems, reference-class base rates, and mandatory decision logs.

- Kepner-Tregoe (old but still alive at GSK, Sanofi)

Very structured: Is/Is-Not analysis, decision matrix, potential problem analysis.

- McKinsey Decision Tree / Issue Tree approach

Brutally forces MECE framing and explicit tracks.

- Bayer’s “Decision Boards” + “Kill Early, Kill Often” mantra

Two-stage: technical feasibility board first (scientists only), then commercial board.

- Asymmetric / Two-Track Model (what the blog post is really advocating)

Stop pretending; just admit you run oncology like a different company from gene therapy.

The One-Page Decision Intelligence Checklist I’d Give to Portfolio Teams

Before you walk into any phase I governance meeting, can you answer YES to all of these?

- Decision question written in one sentence

- Explicit success criteria and kill criteria written down

- Risk tolerance track declared (Track A/B/C…)

- Base-rate reference class presented (what actually happened to the last 10 similar programs)

- Pre-mortem completed and mitigations listed

- Value of perfect information calculated for any proposed new studies

- Someone in the room is formally assigned to argue the opposite case

- Decision and rationale will be logged and revisited in 18 months

If you can’t tick every box, you are not making an intelligent decision. You’re just performing one with better PowerPoint.
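The "value of perfect information" item in the checklist is the one teams most often skip, so here is a minimal sketch of the textbook calculation. The states, actions, probabilities, and payoffs are hypothetical numbers for illustration; the function name `evpi` is mine, not part of any named framework.

```python
def evpi(p_states, payoffs):
    """Expected value of perfect information.

    p_states: {state: probability}
    payoffs:  {action: {state: payoff}}
    """
    # Best expected payoff when you must commit to one action before the state is known
    best_without = max(
        sum(p_states[s] * row[s] for s in p_states) for row in payoffs.values()
    )
    # Expected payoff if the state were revealed first and you then picked the best action
    best_with = sum(
        p_states[s] * max(row[s] for row in payoffs.values()) for s in p_states
    )
    return best_with - best_without

# Hypothetical go/stop decision, payoffs in $M
p_states = {"works": 0.2, "fails": 0.8}
payoffs = {
    "go":   {"works": 900, "fails": -100},
    "stop": {"works": 0,   "fails": 0},
}

value = evpi(p_states, payoffs)
print(round(value, 2))  # 80.0
```

On these numbers, no proposed study is worth more than about $80M, however good it is, because that is what a perfect answer would be worth. Real studies give imperfect answers and are worth less.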

That’s what decision intelligence actually looks like when you strip away the consulting jargon: ruthless honesty about risk asymmetry, enforced base rates, and a process that makes it hard to hide behind spreadsheets.

An Important Note on Decision Risk vs. Decision Intelligence

The single-process illusion doesn’t just hide safety risk; it quietly strangles ambition.

When every program is forced through the same NPV ≥ $500 M and pTS ≥ 18 % filter, the easiest way to pass is to shrink the vision until it fits.

- A potentially curative AAV gene therapy for a blinding disease gets re-framed as “visuomotor improvement by +12 letters at year 2” because that’s what the model recognises as “realistic.”

- A brain-penetrant degrader that could actually modify Alzheimer’s is re-scoped to “mild cognitive impairment, adjunct to donepezil” because disease-modification is apparently unmodellable and therefore worth zero in the spreadsheet.

The universal framework is quietly optimised for the median oncology small molecule, not the outlier that might actually change medicine. It rewards predictable mediocrity and punishes asymmetric upside.

True decision intelligence does the reverse: it forces you to ask, out loud, “What would have to be true for this to be a ten-billion-dollar, decade-defining medicine?” and then designs the experiments and risk tolerances around that ambition instead of trimming the ambition to match the experiments you already have.

Until we are willing to say “This program is allowed to have a 9 % technical success probability because the efficacy, if it hits, rewrites the specialty,” we are not allocating capital intelligently.

We are just safety-washing incrementalism in the clothing of rigour.
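A quick piece of arithmetic shows why a probability hurdle on its own can misallocate capital. The payoffs and costs below are hypothetical, chosen only to illustrate the asymmetry; the 9 % probability and the 18 % hurdle are the figures used above.

```python
def enpv(p_success, value_if_success_musd, cost_musd):
    """Expected NPV in $M: probability-weighted payoff minus the cost of trying."""
    return p_success * value_if_success_musd - cost_musd

# Hypothetical programs
incremental = enpv(0.20, 3_000, 100)      # roughly 500
transformative = enpv(0.09, 15_000, 300)  # roughly 1050

PTS_HURDLE = 0.18                   # the uniform filter quoted in the text
passes_filter = 0.09 >= PTS_HURDLE  # False: the 9 % program is screened out

print(round(incremental), round(transformative), passes_filter)
```

Under these assumptions the program that fails the hurdle has roughly twice the expected value of the one that clears it.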

Green doesn’t always mean go. Sometimes it just means “safe enough to be average.”

I’ve argued before that pharma might, if it wants to de-risk early phase decisions, at least try a second process – even on 10% of the portfolio. The decision not to is an active decision…

Until we are willing to say out loud “this process that we, and most other companies, use is not, and never has been, fit for the early phase portfolio,” we are not making decisions. We are performing them.

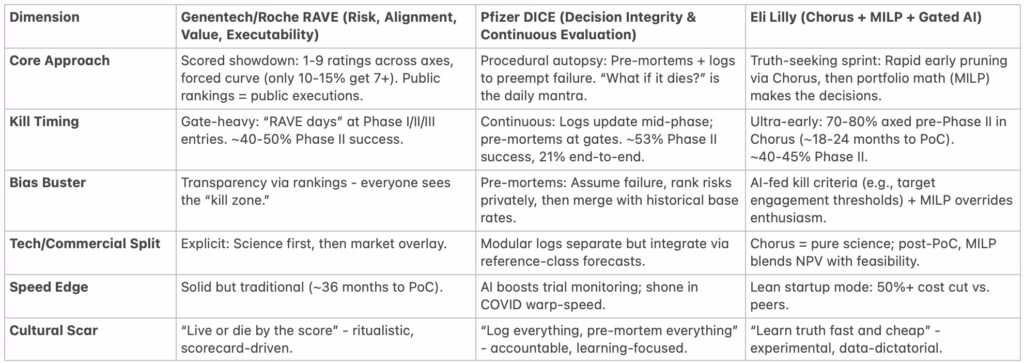

Decision Frameworks in Pharma: From RAVE and DICE to Lilly’s Truth-Seeking Machine

We’ve all seen the headlines: Lilly’s GLP-1 juggernaut (Mounjaro and Zepbound bringing in over $20B in 2024 alone, and building the industry’s first $1 trillion company) versus the industry’s dismal 10-15% end-to-end success rate. But what’s the secret? Is it a scored ritual like Genentech’s RAVE, Pfizer’s pre-mortem autopsy in DICE, or something more… asymmetric?

The rest of this post distills a recent X thread sparked by the first part of this post. (A shoutout to @biobrainbox for the follow-up question on correlations to success rates.) I’ll compare the big three frameworks – Genentech/Roche’s RAVE, Pfizer’s DICE, and Eli Lilly’s integrated beast (Chorus + MILP optimization) – drawing lessons from my Substack posts on asymmetric bets and innovation traps, and from my 2023 IDEA Collider interview with Dan Skovronsky, Lilly’s CSO, who discussed “truth-seeking” over wishful thinking. (If you haven’t watched it, you should: IDEA Collider | Dan Skovronsky. It’s 45 minutes well spent.)

MILP at Eli Lilly stands for Mixed-Integer Linear Programming.

MILP is a computational optimization technique that Lilly employs for portfolio-wide R&D planning and resource allocation. It models complex scheduling problems – such as balancing timelines, budgets, manufacturing capacities, and risk probabilities across their small-molecule and biologics pipelines – as mathematical equations to generate go/no-go recommendations and automated resource shifts. For instance, it can reallocate funding from underperforming oncology projects to high-NPV incretin assets based on updated success probabilities.

This approach was formalized in a 2020 collaboration with Carnegie Mellon University and is central to Lilly’s decision model, helping drive their ~25% end-to-end clinical success rate (vs. industry ~10-15%) by prioritizing maths over gut-feel (‘gut feel’ is often hidden by management consultancy-speak in many companies). It’s detailed in the paper “Portfolio-wide Optimization of Pharmaceutical R&D Activities” by Lilly researchers, available on Optimization Online.
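For readers who have not met this kind of optimization, here is a toy version of the underlying idea: choose the set of projects that maximizes total eNPV without exceeding a budget. This is emphatically not Lilly's model. It ignores scheduling, capacity, and probabilities, the project data are hypothetical, and it swaps a real MILP solver for brute-force enumeration, which only works because there are four projects.

```python
from itertools import combinations

# (name, cost in $M, eNPV in $M); all values hypothetical
projects = [
    ("A", 120, 300),
    ("B", 200, 450),
    ("C", 150, 380),
    ("D", 90, 160),
]
BUDGET = 400  # hypothetical budget in $M

def best_portfolio(projects, budget):
    """Enumerate every subset and keep the affordable one with the highest total eNPV."""
    best, best_value = (), 0
    for r in range(1, len(projects) + 1):
        for combo in combinations(projects, r):
            cost = sum(c for _, c, _ in combo)
            value = sum(v for _, _, v in combo)
            if cost <= budget and value > best_value:
                best, best_value = combo, value
    return [name for name, _, _ in best], best_value

chosen, total = best_portfolio(projects, BUDGET)
print(chosen, total)  # ['A', 'C', 'D'] 840
```

Note that the highest-eNPV single project, B, is left out: three smaller projects fit the budget better. That kind of non-obvious answer is the point of letting the maths choose, and it is why a real portfolio with hundreds of interacting constraints needs a proper solver rather than enumeration.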

The truth is that no framework is a silver bullet, but the ones that correlate with outsized wins (Lilly’s ~25% success rate, Pfizer’s pandemic pivots) share a core: early kills, maths over mood, and mechanisms to fight human bias. (I think it is worth crediting AstraZeneca for being an early leader with their 5Rs model (now 6Rs), as recorded in my interview with their previous Head of R&D, Mene Pangalos.)

The Frameworks: A Side-by-Side Comparison

Pharma’s decision tools aren’t born in vacuums – they’re tested against Phase II attrition rates that compare badly to gambling. Here’s how the best stack up, based on public documents and posts, ex-employee writings, and my chats with insiders.

(Sources: NRDD 2012 on Chorus; Optimization Online 2020 on Lilly MILP; DIA talks on DICE; Genentech alumni blogs on RAVE.)

RAVE looks more like a gladiatorial arena – brutal and visible. DICE looks more like a lab review, dissecting risks before they escalate. Lilly? A venture studio, betting small to win big (echoing my Substack on winning small = winning big).

Spotlight: Pfizer’s Pre-Mortems – The DICE Dagger

If DICE has a killer app, it’s the pre-mortem. I have written for a long time about the pre-mortem, and its value, so it is nice to see it out in the world. (There’s also a video here…)

Mandatory at every gate (and ad-hoc for red flags), it’s used here for triage. Note: triage vs post-mortem – the right way to use Asymmetric Learning for good. Here’s the playbook, straight from Pfizer’s public statements:

- Fail Forward: Assume it’s 3 years out, and the project’s DOA. “What killed it?”

- Silent Storm: 10-15 mins of solo brainstorming – biology misses, safety scares, competitor ambushes. No chit-chat, no hierarchy.

- Rank & Reveal: Top 3-5 failure modes per person, then round-robin share. Repetition = signal.

- Reality Check: With base rates from Pfizer’s vault (e.g., “18% success for this mechanism”). Hard-headed calibration.

- Action or Die: Assign owners, experiments, deadlines. Log everything – audit trail for accountability.

- Rinse, Repeat: Logs live forever; unaddressed fears = career coffins.

It is possible that this saved Pfizer’s COVID bacon. Early 2020 pre-mortems flagged COVID variant escape, which gave rise to parallel manufacturing before Delta emerged. It’s why their success rates flipped from laggard (2% end-to-end in 2010) to leader, and why they were one of the fastest and best decision makers in that period. Lesson? Pre-mortems harness imagination against optimism bias – the silent killer of 70% of late-stage flops.

Lilly’s Asymmetric Edge: Lessons from Dan Skovronsky

No framework chat is complete without Lilly (although my last post excluded them, while I looked for more evidence), the 2025 productivity and innovation beast (5+ approvals, 40% revenue from fresh launches – see my post Take one pharma company…). Their model’s no monolith: It’s Chorus (early “truth-seeking” unit, killing 70%+ on hard data) feeding MILP (the portfolio optimizer, detailed above, that reallocates ruthlessly) and AI gates (Magnol.AI predicting outcomes).

In my interview with Dan Skovronsky (timestamp ~15:00), he said: “We’re not in the business of hope; we’re in the business of evidence.” Chorus, born in 2003 to outpace Genentech, runs like a biotech accelerator: Nominate candidates, hit milestones (first-in-human, efficacy signals), exit fast if biology doesn’t come through. Result? Phase II success ~40%, costs halved. Dan credits it for tirzepatide’s dual-mechanism optionality – multiple paths to glory, minimal downside (straight out of my asymmetric bets playbook).

But here’s the Skovronsky phrase that struck me (~28:00): “Decision-making is where we lose the most. It’s not the science – it’s admitting when we’re wrong.” That echoes my Substack on sticking with failures: Phase I’s the real money pit, where wrong calls compound into billions lost. Lilly fixes it with “gardener” leadership (per Safi Bahcall’s framework in Pharma’s worst bet) – nurture diverse shoots, prune without ego. No senior leadership anointing losers.

Is there a correlation to their success? Lilly’s hotter streak (GLP-1 dominance) suggests yes – their model is modern: Speed + maths > ritual alone. But as Dan warned (~35:00), “Even we get it wrong. The key is learning faster than competitors.” (I’d like to highlight that – the industry’s most successful head of R&D points to the same key that this blog is based upon…)

Asymmetric Lessons for Your Portfolio

From my Substack, here’s the meta-framework – because no off-the-shelf tool survives contact with reality unchanged:

- Bet Asymmetric: Like Lilly’s tirzepatide (dual GLP-1/GIP), design for multiple wins, low maximum loss. (See Winning small = winning big.)

- Escape the Moses Trap: Ditch top-down decrees for diverse input. Bahcall’s “loon shots” need gardeners, not kings (Pharma’s worst bet).

- Pre-Mortem Religiously: Steal from DICE – it’s the ultimate bias mitigator. Pair with RAVE’s transparency for no-gos that stick.

- Truth Over Triumph: Follow Dan Skovronsky: Evidence gates early, maths late. AI’s a referee, not your cheerleader.

- Measure What Matters: Track Phase I kill rates, not just moves into Phase II. Fresh revenue % (Lilly’s 40% vs. J&J’s <5%) is your North Star (Take one pharma company…).

Pharma’s not broken – it’s biased. These frameworks prove discipline scales. But as I argued in Rethinking Pharma with Alex Telford, the real revolution is approving processes, not pills – unlocking scale for the next tirzepatide.